The Future in a Chart: Understanding AI Risk Before It’s Too Late

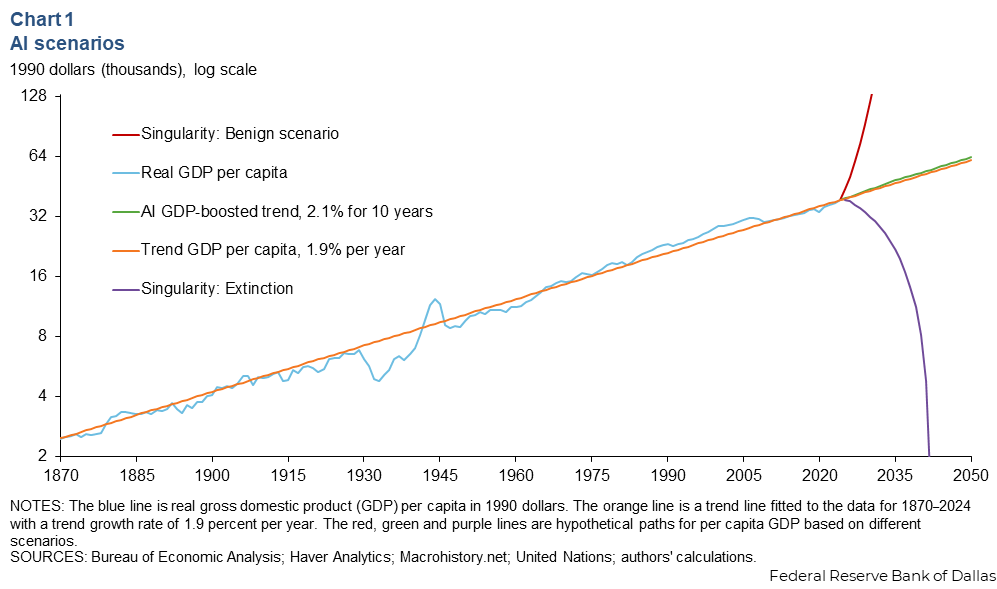

We’re living through a technological revolution that could end in utopia, normalcy, or extinction. If that sounds dramatic, consider the chart above from the Federal Reserve Bank of Dallas, which maps three wildly different futures depending on how AI develops. One line shows modest GDP gains. Another rockets toward post-scarcity abundance. The third plummets to zero, representing human extinction from misaligned AI. This isn’t science fiction. It’s economic modeling from one of America’s most cautious institutions.

The Godfather of AI Issues a Warning

In this essential interview with Jon Stewart, Geoffrey Hinton, the “Godfather of AI” who shared the 2024 Nobel Prize in Physics for pioneering work on neural networks, explains why even the people who build these systems don’t fully understand them. When Stewart asks whether AI systems can make decisions based on their own experiences, Hinton replies: “In the same sense as people do, yes.” Hinton believes that AI will develop consciousness and self-awareness, and when asked whether humans will become the second most intelligent beings on the planet, he responds simply: “Yeah.” This isn’t speculation from a fringe theorist. This is the man whose work made modern AI possible, and he left Google specifically to warn about what he helped create.

Why the Engineers Are In Over Their Heads

If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All by Eliezer Yudkowsky and Nate Soares exposes a disturbing paradox: the people who best understand AI’s existential risks, including OpenAI’s CEO, Google DeepMind executives, and Turing Award winners, are the same ones accelerating toward them in what the book calls a “suicide race.” The engineers building these systems are brilliant and well-meaning, but they can’t explain what happens inside large language models or control what these systems ultimately want. Yudkowsky himself helped inspire OpenAI’s founding, an outcome his own institute now views as having “backfired spectacularly.” The book’s core warning: by the time the danger becomes obvious to everyone, it will be too late.

Policymakers Are Starting to Ask Questions

While researchers warn about existential risk, Senator Bernie Sanders is addressing AI’s immediate economic impact, warning that nearly 100 million U.S. jobs could vanish within a decade. His Senate report proposes solutions including a “robot tax” and a shorter workweek. Whether or not you agree with the specific proposals, it matters that policymakers are finally engaging with these questions, because the people building AI are telling us they don’t have all the answers.

The Dallas Fed chart shows we’re not choosing between similar futures—we’re choosing between radically different outcomes for civilization. Watch the Hinton interview. Read the book. And demand that the conversation about AI’s future includes more voices than just those racing to build it. The window to get this right is closing.